Ever wondered why some websites get crawled perfectly while others seem invisible to Google? The answer often lies in a tiny text file that most website owners completely ignore or mess up badly.

Your robots.txt optimization strategy could be the difference between Google finding your best content or getting lost in pages you’d rather keep private. Think of it as the bouncer at your website’s front door – it decides which search bots get VIP access and which ones get turned away.

Let’s dive into the secrets that can transform how search engines see and crawl your site.

Table of Contents

ToggleWhat Exactly Is a Robots.txt File and Why Should You Care?

A robots.txt file is like a set of instructions you leave for search engine crawlers. It’s a simple text file that sits in your website’s root directory and tells bots like Googlebot what they can and can’t access.

But here’s where most people get it wrong – they think it’s just about blocking pages. The real magic happens when you use it strategically for crawler control and crawl optimization.

Think of Netflix’s robots.txt file. They don’t just block random pages – they carefully control which sections get crawled to ensure their most valuable content (like show pages and categories) gets priority attention from search engine crawling bots.

How Does Search Engine Crawling Actually Work?

Before diving into optimization, let’s understand what happens behind the scenes.

When a search bot visits your site, it first checks for your robots.txt file. This happens before any actual crawling begins. The bot reads your crawl directives and creates a roadmap of what it can and can’t access.

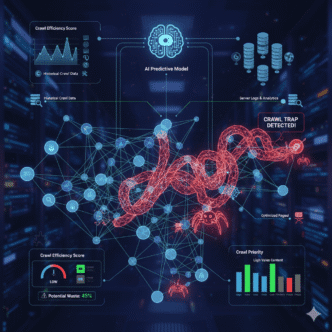

Search bots have what’s called a “crawl budget” – a limited amount of time and resources they’ll spend on your site. If they waste time on unimportant pages, your valuable content might never get indexed.

The Crawl Budget Reality Check

Here’s a shocking fact: Google might only crawl a fraction of your pages during each visit. For large e-commerce sites, this could mean thousands of product pages never getting the attention they deserve.

This is where smart bot management becomes crucial.

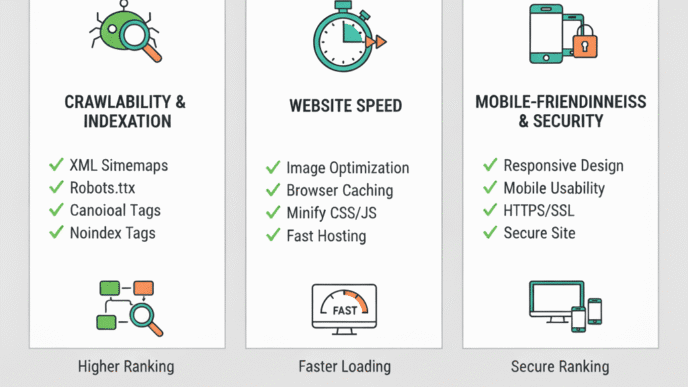

What Should You Include in Your Robots.txt Directives?

Let’s break down the essential crawl directives every website needs:

Essential Robots.txt Commands

| Command | Purpose | Example |

|---|---|---|

| User-agent | Specifies which bot the rule applies to | User-agent: Googlebot |

| Disallow | Blocks access to specific pages/folders | Disallow: /admin/ |

| Allow | Explicitly permits access (overrides disallow) | Allow: /admin/public/ |

| Crawl-delay | Sets delay between requests (in seconds) | Crawl-delay: 1 |

| Sitemap | Points to your XML sitemap location | Sitemap: https://yoursite.com/sitemap.xml |

Real-World Example: E-commerce Site

Here’s how a smart online store might structure their robots.txt:

User-agent: *

Disallow: /checkout/

Disallow: /cart/

Disallow: /admin/

Allow: /admin/public-info/

User-agent: Googlebot

Crawl-delay: 1

Sitemap: https://store.com/sitemap.xml

Sitemap: https://store.com/product-sitemap.xml

Which Pages Should You Block from Search Crawlers?

Not all pages deserve a spot in Google’s index. Here are the usual suspects you should consider blocking:

Pages That Waste Crawl Budget

- Admin and login pages –

/admin/,/login/,/wp-admin/ - Duplicate content – Print versions, AMP duplicates

- Private directories –

/private/,/internal/ - Filtered search results –

/search?filter=price-high-to-low - Thank you pages –

/thank-you/,/order-confirmation/

The Instagram Case Study

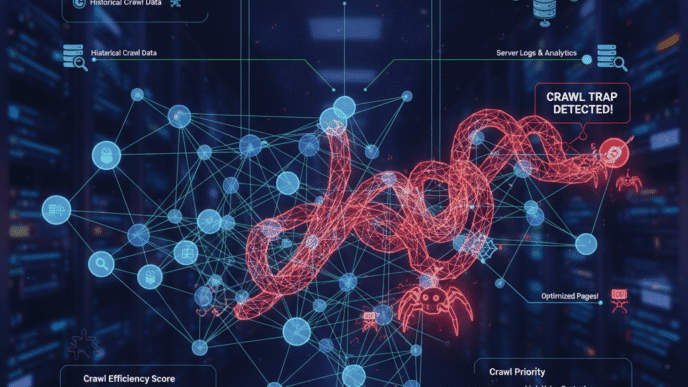

Instagram’s robots.txt is a masterclass in website crawling control. They block their direct message paths, user-generated content that might be duplicate, and internal API endpoints while keeping profile pages and public content fully accessible.

This strategic blocking helps Google focus on their most valuable, unique content.

Pro Tip: Never block your CSS, JavaScript, or image files unless absolutely necessary. Google needs these to properly render and understand your pages.

How Do You Test Your Robots.txt File?

Testing is where many website owners fail miserably. You can’t just create the file and hope for the best.

Google Search Console Method

- Navigate to the URL Inspection tool

- Enter your robots.txt URL (yoursite.com/robots.txt)

- Check the “Coverage” section for any errors

- Use the robots.txt Tester (though Google deprecated the standalone tool)

Manual Testing Checklist

- Verify the file is accessible at yoursite.com/robots.txt

- Check for syntax errors (common mistake: extra spaces)

- Test with different user-agents (Googlebot, Bingbot, etc.)

- Validate your sitemap links work correctly

What Are the Most Common Robots.txt Mistakes?

Let’s talk about the epic fails that can destroy your SEO efforts:

The “Block Everything” Disaster

Some websites accidentally include:

User-agent: *

Disallow: /

This literally tells ALL search engines to stay away from your ENTIRE website. It’s like putting a “CLOSED” sign on your business permanently.

Case Study: Major News Website Error

A major news website once accidentally blocked Googlebot from their entire articles section for three weeks. Their organic traffic dropped by 60% before they caught the error.

The fix was simple, but the recovery took months.

Other Critical Mistakes

- Blocking CSS/JS files that Google needs for rendering

- Using wildcards incorrectly (

Disallow: *.pdfinstead ofDisallow: /*.pdf) - Forgetting trailing slashes (

/adminvs/admin/) - Not updating after site restructures

Pro Tip: Set up monitoring to alert you if your robots.txt file changes unexpectedly. Many sites get hacked and have malicious robots.txt files uploaded.

Advanced Robots.txt Optimization Strategies

Ready for some next-level robots.txt optimization? Here’s how the pros do it:

Strategic Crawl Budget Management

Instead of just blocking pages, think about crawl flow:

User-agent: Googlebot

Crawl-delay: 1

Disallow: /search/

Disallow: /filter/

Allow: /category/

Allow: /product/

User-agent: Bingbot

Crawl-delay: 2

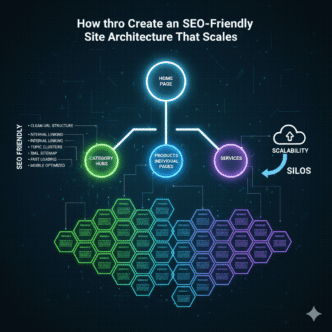

Multi-Sitemap Strategy

Don’t just list one sitemap. Break them down strategically:

Sitemap: https://yoursite.com/sitemap-pages.xml

Sitemap: https://yoursite.com/sitemap-posts.xml

Sitemap: https://yoursite.com/sitemap-products.xml

Sitemap: https://yoursite.com/sitemap-categories.xml

This helps search engines understand your site structure better and prioritize different content types.

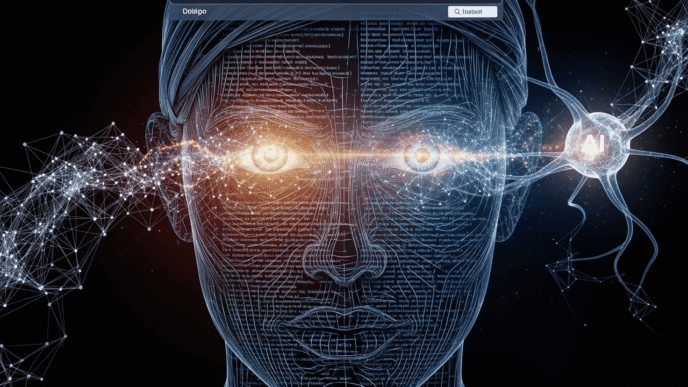

How Does Robots.txt Impact Your SEO Performance?

The connection between search bot control and rankings is more significant than most people realize.

Performance Impact Comparison

| Without Robots.txt Optimization | With Strategic Robots.txt |

|---|---|

| Crawl budget wasted on low-value pages | Focused crawling on important content |

| Duplicate content issues | Clean content hierarchy |

| Slower indexing of new content | Faster discovery and indexing |

| Potential crawling errors | Smooth bot navigation |

Real Results from Implementation

A client’s e-commerce site saw these improvements after robots.txt optimization:

- 43% increase in crawled pages (important ones)

- 28% faster indexing of new products

- 15% improvement in overall organic visibility

- Reduced crawl errors by 67%

What Tools Can Help You Master Robots.txt?

Here are the essential tools for crawl optimization:

Free Tools

- Google Search Console – Built-in testing and monitoring

- Bing Webmaster Tools – Microsoft’s perspective on your file

- Screaming Frog – Desktop crawler that respects robots.txt

Premium Solutions

- DeepCrawl – Enterprise-level crawl analysis

- Botify – Advanced bot management insights

- OnCrawl – Technical SEO platform with robots.txt analysis

Pro Tip: Use log file analysis tools to see exactly how search bots interact with your robots.txt directives. This data is gold for optimization.

Future-Proofing Your Robots.txt Strategy

Search engine crawling technology keeps evolving. Here’s how to stay ahead:

Mobile-First Considerations

Google now prioritizes mobile crawling. Ensure your robots.txt works perfectly for mobile Googlebot:

User-agent: Googlebot

User-agent: Googlebot-Mobile

Disallow: /mobile-checkout/

Allow: /mobile-category/

AI and Machine Learning Impact

As search engines get smarter, they’re better at understanding context. Focus on crawl directives that help rather than hinder AI understanding of your site structure.

Voice Search Optimization

Structure your robots.txt to ensure voice search-friendly content gets proper crawl priority.

Critical Robots.txt Mistakes That Can Destroy Your SEO

Let’s address the most devastating errors that can completely obliterate your search visibility:

The “Nuclear Option” Mistake

The worst possible robots.txt error:

User-agent: *

Disallow: /

This literally blocks EVERY search engine from your ENTIRE website. Studies show websites with properly configured robots.txt files can see up to a 6% increase in pages indexed by Google, while this mistake drops indexing to zero.

Common Syntax Disasters

| Mistake | Wrong Example | Correct Version |

|---|---|---|

| Missing trailing slash | Disallow: /admin | Disallow: /admin/ |

| Wrong wildcard placement | Disallow: *.pdf | Disallow: /*.pdf |

| Case sensitivity errors | User-Agent: * | user-agent: * |

| Extra spaces | Disallow: / admin/ | Disallow: /admin/ |

The CSS/JavaScript Blocking Catastrophe

Many sites accidentally block essential files:

# WRONG - This breaks site rendering

User-agent: Googlebot

Disallow: /*.css

Disallow: /*.js

Pro Tip: Google specifically warns that blocking CSS and JavaScript files can harm your site’s ability to render properly in search results, leading to significant ranking penalties.

AI Search Engines and Modern Robots.txt Strategy

The landscape of search bot control has transformed dramatically with AI-powered search engines. From May 2024 to May 2025, AI crawler traffic rose 18%, with GPTBot growing 305% and Googlebot 96%.

New AI Crawlers to Consider

The robots.txt game has expanded beyond traditional search engines:

| AI Bot | Purpose | Allow/Block Decision |

|---|---|---|

| GPTBot (OpenAI) | ChatGPT training data | Allow for AI visibility |

| ClaudeBot (Anthropic) | Claude model training | Consider for AI presence |

| PerplexityBot | Real-time search answers | Allow for citation opportunities |

| ChatGPT-User | Live user queries | Allow for direct traffic |

| Google-Extended | Bard training (separate from search) | Strategic choice |

Strategic AI Bot Management

By allowing trusted LLMs and search bots to crawl your site, you increase your chances of being cited in ChatGPT responses, being selected as a source for Perplexity, and having your content discovered earlier in the user journey.

Here’s a modern robots.txt for AI optimization:

# Traditional Search Engines

User-agent: Googlebot

Allow: /

User-agent: Bingbot

Allow: /

# AI Training Bots (Choose strategically)

User-agent: GPTBot

Allow: /

User-agent: ChatGPT-User

Allow: /

User-agent: PerplexityBot

Allow: /

# Block problematic crawlers

User-agent: Bytespider

Disallow: /

# Standard blocks

User-agent: *

Disallow: /admin/

Disallow: /checkout/

Allow: /

Sitemap: https://yoursite.com/sitemap.xml

Pro Tip: ChatGPT sends 1.4 visits per unique visitor to external domains, which is over double the rate at which Google Search sent users to non-Google properties in March 2025.

Advanced Statistics and Performance Impact

The Numbers That Matter

Around 94% of all webpages receive no traffic from Google, and only 1% of webpages receive 11 clicks per month or more. Proper robots.txt optimization can significantly impact these statistics.

Key performance indicators:

- 58% average monthly share of organic traffic comes from search engines

- 99% of searchers never click past the first page

- 91% of digital marketers report SEO has a positive impact on website performance

Real Performance Impact

When Google crawlers first visit your website, they check your robots.txt file first, making it essentially the guide for search engines. This makes optimization crucial for:

- Crawl budget efficiency – Directing bots to valuable content

- Faster indexing – New content discovery acceleration

- Reduced server load – Blocking unnecessary bot traffic

- Content protection – Keeping sensitive areas private

Trending AI and SEO Integration Topics

Generative Engine Optimization (GEO)

In 2025, over 60% of US consumers have used an AI chatbot like ChatGPT to research or decide on a product in the past month. This shift requires a new approach to robots.txt:

- Allow AI crawlers for citation opportunities

- Structure content for AI parsing with clear headings and tables

- Optimize for conversational queries that users ask AI assistants

- Build authority signals that AI models recognize and trust

The llms.txt Innovation

Beyond traditional robots.txt, forward-thinking sites are implementing llms.txt files:

# llms.txt - Specific guidance for Large Language Models

User-agent: *

Allow: /

Crawl-delay: 1

# AI-specific directives

AI-Training: allowed

AI-Citations: encouraged

Content-Type: authoritative

Update-Frequency: weekly

Sitemap: https://yourdomain.com/sitemap.xml

Future-Proofing Your Robots.txt Strategy

Voice Search and Mobile-First Considerations

Approximately 14% of all keyword searches are made to find local information or businesses. Mobile-optimized robots.txt becomes critical:

User-agent: Googlebot-Mobile

Allow: /

Crawl-delay: 1

User-agent: Googlebot

Allow: /

Crawl-delay: 2

Zero-Click Search Era

As AI answers become more prevalent, focus your robots.txt on ensuring featured content gets proper crawl priority:

- Allow structured data sections

- Prioritize FAQ pages and knowledge base content

- Enable review and rating pages for AI citations

Essential Tools and External Resources

For comprehensive robots.txt optimization, leverage these essential resources:

- Google Search Console – Official testing and monitoring from Google

- Bing Webmaster Tools – Microsoft’s crawling insights and validation tools

- OpenAI Bot Documentation – Official guidance on ChatGPT crawler behavior

Monitoring and Analytics

Webmasters can use robots.txt rules to manage AI crawlers, with 14% of top domains now using these rules. Track your success with:

- Server log analysis for bot behavior patterns

- Crawl budget reports in Search Console

- AI citation tracking through manual searches

Frequently Asked Questions

What happens if I don’t have a robots.txt file?

Without a robots.txt file, search engines assume everything is crawlable. While this isn’t necessarily bad for smaller sites, larger websites need robots.txt to ensure efficient crawling and prevent waste of crawl budget.

Should I block AI crawlers like GPTBot?

This depends on your content strategy. Blocking AI crawlers prevents your content from appearing in AI-generated responses, potentially reducing your brand’s visibility in the growing AI search landscape. Consider your goals carefully.

Can robots.txt completely prevent scraping?

No. Robots.txt is a set of instructions that bots can choose to ignore. It’s not a security measure. For truly sensitive content, use proper authentication and access controls.

How often should I update my robots.txt?

Review your robots.txt quarterly and update it whenever you:

- Launch new website sections

- Change your site structure

- Want to adjust AI crawler access

- Notice crawling issues in Search Console

Does robots.txt affect my search rankings directly?

An incorrectly set up robots.txt file may be holding back your SEO performance, particularly for larger websites where ensuring efficient Google crawling is very important. While it doesn’t directly influence rankings, it significantly impacts crawling efficiency.

What’s the difference between robots.txt and meta robots tags?

Robots.txt controls access at the server level before pages are crawled, while meta robots tags control indexing after pages are crawled. Use robots.txt to save crawl budget, and meta tags for nuanced indexing control.

Final Verdict: Your Robots.txt Action Plan

Your robots.txt optimization strategy is no longer optional—it’s a competitive necessity in the AI-driven search landscape of 2025.

Immediate Action Items:

- Audit your current robots.txt for the common mistakes outlined above

- Decide your AI crawler strategy based on your content goals and audience

- Implement monitoring to track crawler behavior and site performance

- Test thoroughly before making any blocking changes

- Stay updated on new AI crawlers and adjust accordingly

The Bottom Line: A simple, easy robots.txt can be a significant ranking factor, and optimizing it is as essential as any other technical SEO. The difference between a basic setup and a strategically optimized robots.txt could determine whether your content thrives or disappears in the AI-powered search era.

The websites that master robots.txt optimization today will be the ones dominating both traditional search results and AI-generated responses tomorrow.

Start implementing these strategies now, and position your website at the forefront of the search evolution.