You type “best pizza NYC.” You speak “Hey Google, where can I get the best pizza delivered to my apartment in Manhattan right now?” Same intent. Completely different language. And that difference is costing you customers.

Natural language patterns in voice search represent the fundamental shift from keyword-based search to human conversation. Understanding how people actually speak to devices isn’t just interesting linguistics; it’s the difference between appearing in voice results and being completely invisible.

This guide reveals the psychology, patterns, and practical applications of conversational search behavior so you can optimize for the way humans naturally communicate.

What Are Natural Language Patterns in Voice Search?

Natural language patterns refer to the conversational structures, grammatical conventions, and linguistic habits people use when speaking to voice assistants. Unlike typed queries, spoken searches mirror actual human conversation.

When someone types, they economize. Short phrases. Keyword fragments. Minimal grammar. When they speak, they use complete sentences with proper structure, context, and conversational markers.

According to Backlinko’s voice search study, the average voice search result (the answer an assistant reads aloud) is 29 words long. Spoken queries themselves run noticeably longer and more conversational than typed queries, which often contain just 2-3 words. That gap fundamentally changes optimization strategy.

Conversational search patterns include question words (who, what, when, where, why, how), polite language (“please,” “thank you”), location context (“near me,” “closest”), and temporal references (“right now,” “today,” “tomorrow”).

Why Do People Speak Differently Than They Type?

The cognitive load of typing forces brevity. Every character requires deliberate action—selecting, pressing, correcting. This friction creates evolved shorthand: “weather boston,” “cheap hotels miami,” “pizza delivery.”

Speaking requires no such economy. Words flow naturally without physical effort. We’ve spent our entire lives speaking in complete sentences but only decades typing abbreviated queries.

Spoken search behavior also includes social conditioning. We’re taught from childhood to speak politely, use complete sentences, and provide context. These habits persist when talking to devices even though Alexa doesn’t actually care about manners.

According to PwC’s consumer intelligence research, 71% of people prefer conducting queries by voice over typing when hands-free capability matters. This preference reveals voice as the more natural interface matching human communication patterns.

What Question Patterns Dominate Voice Search?

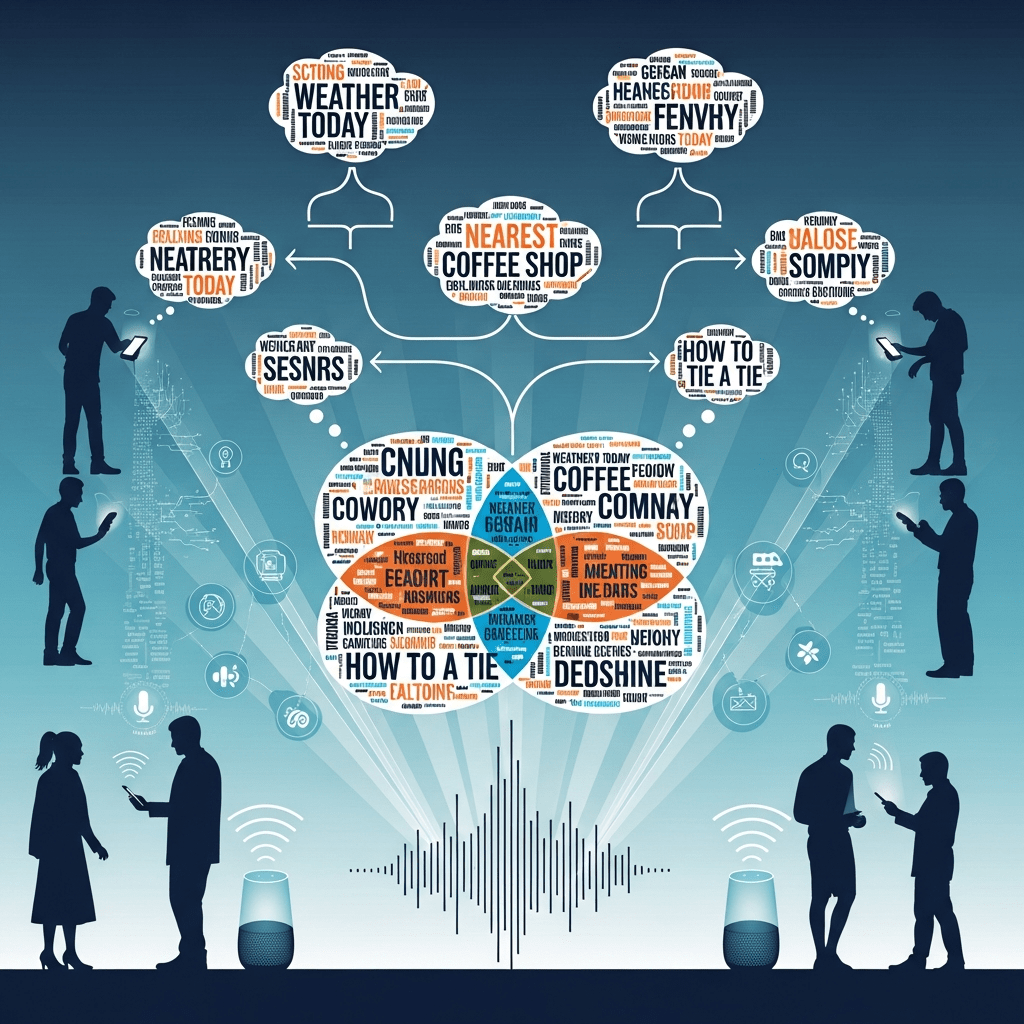

Questions drive voice search overwhelmingly. Moz research indicates that 14.1% of all Google searches use question modifiers, but this percentage jumps dramatically higher for voice queries.

The Six Essential Question Types

Who Questions: Identity, attribution, and person-related queries

- “Who is the CEO of Tesla?”

- “Who invented the telephone?”

- “Who can fix my air conditioner near me?”

What Questions: Definitions, explanations, and information

- “What is voice search optimization?”

- “What causes headaches?”

- “What’s the best restaurant in Brooklyn?”

When Questions: Timing, schedules, and temporal information

- “When does the store close?”

- “When is the best time to plant tomatoes?”

- “When does the next train arrive?”

Where Questions: Location, directions, and geography

- “Where is the nearest coffee shop?”

- “Where can I buy organic vegetables?”

- “Where should I visit in Paris?”

Why Questions: Reasons, causes, and explanations

- “Why is my internet so slow?”

- “Why do leaves change color?”

- “Why should I use voice search optimization?”

How Questions: Processes, methods, and instructions

- “How do I change a tire?”

- “How much does it cost to install solar panels?”

- “How long does shipping take?”

These six patterns account for the vast majority of informational voice queries. Content structured around answering specific questions captures voice search traffic systematically.
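To see how your own question list splits across the six types, a short script can do the tallying. The sketch below is a minimal illustration, not a standard tool: the function names and the sample list are made up for this example, and it only looks at the first word, so a query that opens with a wake phrase like “Hey Google” would need that phrase stripped first.

```python
import re
from collections import Counter
from typing import Optional

QUESTION_WORDS = ("who", "what", "when", "where", "why", "how")

def question_type(query: str) -> Optional[str]:
    """Return the leading question word of a query, or None if there is none."""
    cleaned = query.strip().lower().lstrip("\"'“‘")
    # Take the first run of letters so "what's" and "where's" map to "what"/"where".
    match = re.match(r"[a-z]+", cleaned)
    if match and match.group(0) in QUESTION_WORDS:
        return match.group(0)
    return None

def tally_question_types(queries: list) -> Counter:
    """Count how many queries start with each of the six question words."""
    return Counter(t for q in queries if (t := question_type(q)) is not None)

# Illustrative sample only; swap in questions from your own research.
sample = [
    "Who invented the telephone?",
    "What's the best restaurant in Brooklyn?",
    "When does the store close?",
    "Where is the nearest coffee shop?",
    "Why is my internet so slow?",
    "How do I change a tire?",
    "How long does shipping take?",
    "pizza delivery",
]
counts = tally_question_types(sample)
```

Sorting `counts.most_common()` gives a quick view of which question types deserve the most content coverage.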

For comprehensive question-based optimization strategies, see our complete voice search guide.

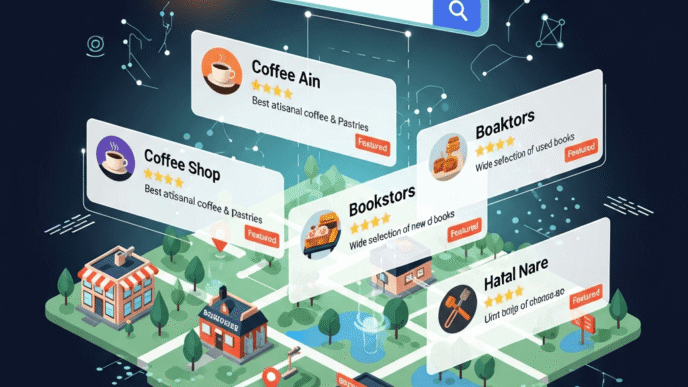

How Do Location Patterns Affect Voice Search Queries?

Local intent permeates voice search. Mobile usage (where most voice searches happen) correlates directly with immediate, location-based needs.

“Near Me” Query Explosion

“Near me” searches grew 900% over two years according to Google’s data. Voice amplifies this trend—speaking “near me” feels natural while typing it seems redundant.

Common location patterns:

- “Near me” / “Nearby” / “Close by”

- “In [neighborhood/city]”

- “Around [landmark]”

- “On my way to [destination]”

- “Between [location A] and [location B]”

People also ask location questions implicitly: “Where’s the best sushi?” assumes “near my current location” without stating it explicitly.

Temporal + Location Combinations

Voice searches frequently combine location with time: “What restaurants are open now near me?” This dual specificity demands real-time data accuracy.

Temporal location patterns:

- “Open now”

- “Open late”

- “24 hours”

- “Delivery available”

- “Shortest wait time”

BrightLocal research shows 58% of consumers used voice search to find local business information, making location optimization critical for most businesses.

What Role Does Intent Play in Natural Language Voice Patterns?

Search intent manifests more clearly in voice queries because natural language provides context absent from keywords.

The Three Primary Intent Types

Informational Intent: Seeking knowledge or answers

- “How does photosynthesis work?”

- “What causes inflation?”

- “Why do cats purr?”

Voice queries for information tend to be longer, more specific, and explicitly question-formatted.

Navigational Intent: Finding specific websites or locations

- “Take me to Starbucks”

- “Find Apple store hours”

- “Navigate to 123 Main Street”

Navigational voice searches often use command language: “take me,” “find,” “show me,” “navigate to.”

Transactional Intent: Ready to purchase or complete action

- “Order pizza from Domino’s”

- “Book a haircut appointment”

- “Buy wireless headphones”

Transactional patterns include action verbs: “order,” “buy,” “book,” “reserve,” “schedule,” “purchase.”

According to Search Engine Journal analysis, transactional voice queries convert 3x higher than informational queries despite being less common. High intent drives high value.
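If you want to sort a batch of queries by intent, a simple rule-based pass is often enough for a first look. The sketch below is an assumption-heavy illustration: the cue lists are copied from the examples in this section, the function name is hypothetical, and real queries will need a much longer vocabulary.

```python
import re

# Cue lists taken from the examples in this section; extend them for your niche.
TRANSACTIONAL_VERBS = {"order", "buy", "book", "reserve", "schedule", "purchase"}
NAVIGATIONAL_PHRASES = ("take me", "find", "show me", "navigate to")
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how"}

def classify_intent(query: str) -> str:
    """Label a query as transactional, navigational, informational, or unknown."""
    text = query.lower().replace("’", "'")
    words = re.findall(r"[a-z']+", text)
    if not words:
        return "unknown"
    normalized = " ".join(words)
    # Transactional queries in this section open with an action verb.
    if words[0] in TRANSACTIONAL_VERBS:
        return "transactional"
    # Navigational queries open with command phrases such as "take me".
    if any(normalized == p or normalized.startswith(p + " ")
           for p in NAVIGATIONAL_PHRASES):
        return "navigational"
    # Informational queries open with a question word ("what's" counts as "what").
    if words[0].split("'")[0] in QUESTION_WORDS:
        return "informational"
    return "unknown"
```

For example, `classify_intent("Order pizza from Domino's")` returns `"transactional"`, while a keyword fragment like `"pizza delivery"` falls through to `"unknown"` and needs a human look.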

How Do Conversational Markers Differ in Voice Search?

The way people use voice search includes conversational elements that rarely appear in typed queries.

Politeness and Social Language

People say “please” and “thank you” to voice assistants despite knowing they’re machines. Social conditioning runs deep.

Common polite patterns:

- “Hey Google, can you please…”

- “Alexa, would you…”

- “Siri, I need help with…”

- “Thank you” (after receiving answer)

While politeness doesn’t affect ranking, understanding these patterns reveals user psychology. People treat voice assistants more like conversational partners than search engines.

Contextual References

Voice searches often reference previous context or assume shared knowledge:

- “What about the red one?” (following previous query)

- “How much does that cost?” (after product inquiry)

- “Is it open now?” (referring to recently mentioned business)

Multi-turn conversations create optimization challenges. Content must answer not just primary questions but logical follow-ups.

Filler Words and Natural Speech

Spoken language includes verbal fillers absent from text:

- “Um,” “uh,” “like,” “you know”

- False starts and self-corrections

- Casual grammar and sentence fragments

Modern voice recognition filters most fillers, but natural language understanding must still interpret imperfect human speech.

For strategies handling conversational queries, explore our voice search optimization framework.

What Are the Most Common Voice Search Query Structures?

Analyzing conversational search patterns reveals predictable structures you can target systematically.

Complete Question Sentences

The dominant pattern: full grammatically correct questions.

Structure: [Question word] + [auxiliary verb] + [subject] + [main verb] + [object/complement]?

Examples:

- “How do I change a tire on my car?”

- “What is the best pizza place in Chicago?”

- “When does the pharmacy close today?”

This structure appears in 60%+ of informational voice queries according to voice search analysis.

Command Structures

Direct instructions to the assistant.

Structure: [Action verb] + [object] + [optional modifiers]

Examples:

- “Call Mom”

- “Set alarm for 7 AM”

- “Play jazz music”

- “Navigate to work”

Commands dominate transactional and navigational queries.

Comparative Questions

Seeking comparisons or evaluations.

Structure: [Question word] + [be verb] + [comparative adjective] + [options]

Examples:

- “Which is better, iPhone or Samsung?”

- “What’s cheaper, Uber or Lyft?”

- “Who has faster shipping, Amazon or Walmart?”

Comparison queries present opportunities for versus-style content and comparison tables.

Local + Attribute Combinations

Combining location with quality descriptors.

Structure: [Superlative/qualifier] + [business type] + [location marker]

Examples:

- “Best Italian restaurant near me”

- “Cheapest gas station around here”

- “Highest rated dentist in Boston”

This pattern drives massive local business search volume.
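The four structures above are regular enough to detect with a few rules. The following is a rough sketch under stated assumptions: the word lists (`COMPARATIVE_WORDS`, `QUALIFIERS`, `COMMAND_VERBS`) are small hypothetical samples drawn from this section's examples, and the rules will misfile anything phrased outside those examples.

```python
import re

QUESTION_START = re.compile(r"^(who|what|when|where|why|how|which)\b")
LOCATION_MARKER = re.compile(r"\b(near me|nearby|close by|around here|in \w+)\b")
COMPARATIVE_WORDS = {"better", "cheaper", "faster", "worse"}
QUALIFIERS = ("best ", "cheapest ", "closest ", "nearest ", "highest rated ")
COMMAND_VERBS = {"call", "set", "play", "navigate"}

def classify_structure(query: str) -> str:
    """Map a query to one of the structures described in this section."""
    text = query.strip().lower().replace("’", "'")
    words = re.findall(r"[a-z']+", text)
    if not words:
        return "other"
    starts_with_question = QUESTION_START.match(text) is not None
    # Comparative: a question that offers options ("X or Y") with a comparative word.
    if starts_with_question and " or " in text and COMPARATIVE_WORDS & set(words):
        return "comparative"
    # Local + attribute: opens with a qualifier and contains a location marker.
    if text.startswith(QUALIFIERS) and LOCATION_MARKER.search(text):
        return "local_attribute"
    if starts_with_question:
        return "question"
    # Command: opens with an action verb aimed at the assistant.
    if words[0] in COMMAND_VERBS:
        return "command"
    return "other"
```

Running this over a query export gives a quick count of which structures your audience favors, which in turn suggests whether to invest in FAQ pages, comparison tables, or local listings first.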

How Does Voice Search Handle Follow-Up Questions?

Modern assistants maintain conversation context across multiple turns. This creates new optimization opportunities and challenges.

Understanding Contextual Continuity

After asking “What’s the weather?” users might follow with “What about tomorrow?” The assistant understands “tomorrow’s weather” from context.

Common follow-up patterns:

- Requesting more detail: “Tell me more about that”

- Seeking alternatives: “What about other options?”

- Adding constraints: “How about vegetarian choices?”

- Clarifying confusion: “I meant the other location”

Content optimized for primary questions should naturally support logical follow-ups.

Pronoun and Ellipsis Usage

Voice conversations use pronouns referencing previous entities:

- “How much does it cost?” (it = previously mentioned item)

- “When are they open?” (they = previously discussed business)

- “Is she available?” (she = previously named person)

Traditional SEO content doesn’t account for pronoun references. Voice-optimized content must work both independently and within conversation threads.

What Regional and Demographic Differences Exist in Voice Patterns?

Voice query patterns vary by age, location, and cultural factors.

Age-Based Pattern Differences

- Younger users (18-34): More casual language, heavy slang usage, faster speech

- Middle-aged users (35-54): Balanced formality, clear enunciation, complete sentences

- Older users (55+): More formal language, slower speech, polite markers

According to Pew Research data, 71% of smartphone users ages 18-29 use voice assistants compared to 49% of those 65+. Younger demographics drive voice search growth.

Regional Language Variations

American English, British English, Australian English, and other variants each have distinct patterns:

- Vocabulary differences: “Lift” vs “elevator,” “chemist” vs “pharmacy”

- Pronunciation variations: Affecting voice recognition accuracy

- Colloquialisms: Regional phrases and expressions

- Measurement systems: Miles vs kilometers, Fahrenheit vs Celsius

Voice assistants increasingly handle regional variations, but content should align with target market language patterns.

Cultural Politeness Norms

Some cultures emphasize formal politeness more than others:

- High-context cultures: More indirect questioning, elaborate politeness

- Low-context cultures: Direct questions, less ceremonial language

Understanding your audience’s cultural communication style improves content resonance.

How Can You Research Natural Language Patterns for Your Industry?

Discovering how people actually phrase voice search questions in your niche requires systematic research.

Mining “People Also Ask” Data

Google’s PAA boxes reveal real questions people ask. They’re gold mines for natural language patterns.

Research process:

- Search your core keywords

- Expand every PAA question to reveal more

- Document 50+ related questions

- Identify pattern commonalities

- Group by intent and structure

Tools like AlsoAsked visualize PAA relationships, revealing question clusters and natural progression paths.

Using AnswerThePublic

AnswerThePublic aggregates autocomplete data into question visualizations.

Enter your topic and receive hundreds of real questions organized by question word (who, what, when, where, why, how).

This tool surfaces actual search queries—the voice patterns people use.

Analyzing Search Console Query Data

Google Search Console reveals exact queries driving traffic. Filter for:

- Queries 7+ words long (likely voice)

- Queries containing question words

- Queries with conversational structure

- Mobile-only queries

These actual queries from your site reveal natural language patterns in your specific niche.
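Search Console does not label queries as voice or typed, so any filter here is a heuristic. The sketch below assumes you have exported query rows into a list of dictionaries with `query` and `device` keys; that row shape, the function names, and the two-signal threshold are all assumptions made for illustration.

```python
import re

QUESTION_WORDS = {"who", "what", "when", "where", "why", "how"}

def voice_signals(row: dict) -> dict:
    """Return the filter criteria from the list above as booleans for one row."""
    text = row["query"].lower().replace("’", "'")
    words = re.findall(r"[a-z0-9']+", text)
    return {
        "long": len(words) >= 7,
        "question": bool(words) and words[0].split("'")[0] in QUESTION_WORDS,
        "mobile": row.get("device", "").lower() == "mobile",
    }

def likely_voice(rows: list, min_signals: int = 2) -> list:
    """Keep rows that match at least `min_signals` criteria (arbitrary threshold)."""
    return [r for r in rows if sum(voice_signals(r).values()) >= min_signals]

# Hypothetical export rows, not real data.
rows = [
    {"query": "cheap hotels miami", "device": "DESKTOP"},
    {"query": "where can i buy organic vegetables near me", "device": "MOBILE"},
    {"query": "how long does shipping take", "device": "MOBILE"},
    {"query": "pizza delivery", "device": "MOBILE"},
]
candidates = likely_voice(rows)
```

Treat the output as a shortlist to read through, not as a measurement of voice traffic.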

Customer Service Question Mining

Your support team fields questions daily. These represent natural language patterns customers use.

Process:

- Review support tickets and live chat transcripts

- Document common questions verbatim

- Identify recurring phrasing patterns

- Note vocabulary customers actually use

- Track seasonal question variations

Customer questions are voice queries in written form—the language is already conversational.

For advanced research techniques, see our complete voice optimization guide.

What Common Mistakes Do Businesses Make with Natural Language Optimization?

Understanding patterns isn’t enough—you must avoid optimization pitfalls that waste effort.

Writing for Robots Instead of Humans

Keyword-stuffed content sounds unnatural when read aloud. Voice assistants increasingly favor genuinely conversational content.

Poor: “Voice search optimization voice search strategies voice search tactics for businesses”

Better: “How can businesses optimize their content for voice search? Start by understanding natural conversation patterns.”

Read your content aloud. If it sounds robotic or awkward spoken, rewrite it.

Ignoring Question Variations

One question topic has dozens of phrasing variations. Targeting just one misses the majority.

Example variations for “fixing a leaky faucet”:

- “How do I fix a leaky faucet?”

- “What’s the best way to repair a dripping tap?”

- “Can I stop my faucet from leaking myself?”

- “Why is my faucet dripping and how do I fix it?”

Create content answering the core question while naturally incorporating common variations.

Overlooking Intent Signals

Natural language reveals intent through word choice. “Best” suggests research phase. “Near me” indicates ready-to-buy. “How to” means DIY learning.

Match content depth and call-to-action to the intent signaled by natural language patterns.

Forgetting Mobile Context

Voice searches happen on-the-go. Someone asking “where’s the nearest coffee shop?” is literally walking around looking for one.

Content must acknowledge this context with immediate, actionable information. Long-winded explanations frustrate mobile voice users.

Using Industry Jargon

People speak conversationally, not technically. They ask “Why does my internet keep cutting out?” not “What causes intermittent connectivity disruptions?”

Use the language your customers actually speak, not industry terminology.

Pro Tip: According to Nielsen Norman Group research, voice interfaces require 7th-9th grade reading level content for optimal comprehension. Complex vocabulary and sentence structure reduce voice search visibility.
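One way to put a number on reading level is the Flesch-Kincaid grade formula: 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The sketch below is only a rough estimate, because the syllable counter is a simple vowel-group heuristic chosen for this example and will miscount some words. Use it to compare drafts against each other, not as an exact grade.

```python
import re

def count_syllables(word: str) -> int:
    """Approximate syllables by counting vowel groups (a heuristic, not exact)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" (as in "time") usually does not add a syllable.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_kincaid_grade(text: str) -> float:
    """Estimate the U.S. school grade level of a passage."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+(?:['’][A-Za-z]+)?", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)

plain = flesch_kincaid_grade("Why does my internet keep cutting out?")
jargon = flesch_kincaid_grade("What causes intermittent connectivity disruptions?")
```

With the two example questions from the jargon section, the conversational phrasing scores far lower than the technical one, which matches how each sounds when read aloud.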

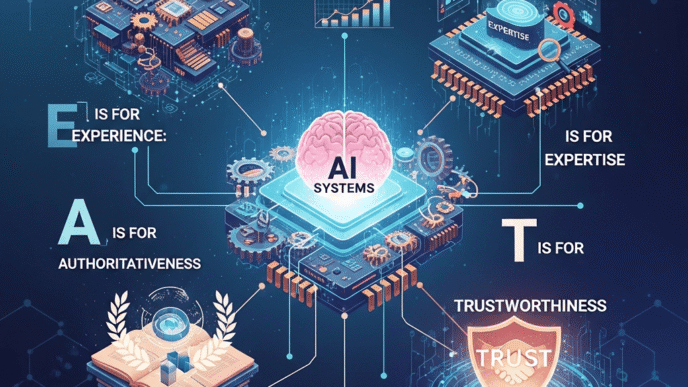

How Will AI and Machine Learning Change Natural Language Voice Patterns?

Understanding natural language patterns in voice search queries becomes more critical as AI advances.

Conversational AI Evolution

GPT-powered assistants understand context, nuance, and implied meaning better than keyword-based systems. This rewards genuinely helpful content over keyword optimization.

Google’s MUM and BERT updates specifically target natural language understanding. Future updates will continue this trajectory.

Multimodal Search Integration

Voice + visual search combinations create new pattern types:

- “What kind of plant is this?” (while showing camera)

- “Find me a dress like this in blue” (pointing at image)

- “How do I assemble this?” (showing product)

These multimodal patterns require optimization across both voice and visual channels.

Predictive and Proactive Assistants

AI assistants increasingly anticipate needs before users ask. This shifts patterns from reactive questions to proactive confirmations:

- “Do you want me to reorder your usual coffee?”

- “Should I add milk to your shopping list?”

- “Ready to start your morning routine?”

While brands can’t control these proactive suggestions directly, consistent positive experiences increase selection probability.

Explore emerging trends in our main voice search resource.

Real-World Examples of Natural Language Pattern Optimization

Domino’s analyzed their customer service calls to understand how people naturally order pizza by voice. They discovered patterns like “Can I get a…” and “I’d like to order…”

They built their voice ordering systems around these actual patterns rather than forcing customers to use specific command structures. The result: 65% of orders now come through digital channels with voice growing fastest.

Mayo Clinic studied how patients phrase health questions. They found people rarely use medical terminology—instead asking “Why does my head hurt?” not “What causes cephalalgia?”

They restructured their content using patient language, creating FAQ sections answering questions in natural conversational patterns. Voice search traffic increased 40% year-over-year.

Practical Framework for Implementing Natural Language Optimization

Transform pattern understanding into actionable optimization:

Step 1: Document Core Questions

- List 20-50 questions customers ask about your products/services

- Write them exactly as people speak them

- Group by topic and intent

Step 2: Identify Pattern Commonalities

- Which question words appear most frequently?

- What conversational markers do people use?

- How do they phrase location or time constraints?

Step 3: Create Conversational Content

- Structure content around natural questions as headings

- Answer in the first paragraph (40-60 words)

- Use conversational tone throughout

- Include related question variations
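The 40-60 word target in Step 3 is easy to check automatically. This is a minimal sketch that assumes each section is plain text with paragraphs separated by blank lines; the function names are made up for this example.

```python
def first_paragraph_word_count(section_text: str) -> int:
    """Count the words in the first non-empty paragraph of a section."""
    paragraphs = [p.strip() for p in section_text.split("\n\n") if p.strip()]
    return len(paragraphs[0].split()) if paragraphs else 0

def answer_length_ok(section_text: str, low: int = 40, high: int = 60) -> bool:
    """Check whether the opening answer falls inside the target word range."""
    return low <= first_paragraph_word_count(section_text) <= high
```

Run it over every question-headed section and rewrite the openings that fall outside the range.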

Step 4: Optimize Technical Elements

- Implement FAQ schema markup

- Ensure mobile page speed under 3 seconds

- Add speakable schema for key sections

- Structure with clear heading hierarchy
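FAQ schema markup from Step 4 is usually published as JSON-LD using the schema.org `FAQPage` type. The sketch below builds that JSON from question and answer pairs; the helper name and the sample pair are placeholders, and speakable markup is not covered here.

```python
import json

def faq_jsonld(pairs: list) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)

# Placeholder content; use the real questions and answers shown on the page.
markup = faq_jsonld([
    ("When does the store close?", "We close at 9 PM on weekdays."),
])
```

Place the resulting string inside a `<script type="application/ld+json">` tag on the page, and make sure the marked-up questions and answers match the visible content.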

Step 5: Test and Iterate

- Monitor Search Console for voice-friendly queries

- Track featured snippet acquisition

- Analyze which patterns drive traffic

- Refine content based on performance

Frequently Asked Questions About Natural Language Voice Search Patterns

How can I identify which queries are voice searches vs typed searches?

Look for queries 7+ words long with conversational structure, question words, and complete sentences in Google Search Console. Mobile-only queries with local intent (“near me,” “open now”) likely represent voice. Analytics showing mobile Safari or Android device traffic with direct or organic source suggests voice usage, though precise attribution remains challenging.

What’s the most important natural language pattern to optimize for?

Question-format queries starting with who, what, when, where, why, or how represent the highest-value pattern. These questions have clear intent and appear most frequently in voice search. Create content with question headings followed by direct 40-60 word answers to capture these queries systematically.

Do voice assistants understand regional accents and dialects?

Modern voice recognition handles most regional accents well with 90%+ accuracy, though performance varies by accent strength and assistant platform. Google Assistant, Alexa, and Siri all continuously improve accent recognition. Optimize content for standard language patterns while acknowledging regional vocabulary differences where relevant.

How do I optimize for follow-up questions in multi-turn conversations?

Structure content to answer both primary questions and logical follow-ups. After explaining “what is [topic],” anticipate users asking “how does it work?” or “why does it matter?” Create comprehensive content supporting conversation progression naturally. Use clear internal linking so assistants can navigate to related answers.

Should I write content differently for voice search than traditional SEO?

Write in conversational tone using natural language, but don’t abandon SEO fundamentals. The best approach combines conversational structure (question headings, direct answers, natural flow) with technical optimization (schema markup, mobile speed, proper formatting). Voice and traditional SEO complement rather than conflict with each other.

How long should answers be for voice search optimization?

Featured snippet-optimized answers work best at 40-60 words—long enough to be useful but short enough for voice assistants to read aloud comfortably. Support brief answers with comprehensive deeper content below. Backlinko research shows average voice search results are 29 words, but surrounding content averages 2,312 words for context and authority.

Final Thoughts on Natural Language Voice Search Patterns

Understanding how people naturally speak to devices isn’t optional anymore. The 8.4 billion voice assistants worldwide respond to conversational language, not keyword fragments.

Natural language patterns reveal user psychology, intent, and needs more clearly than typed keywords ever did. Businesses optimizing for these patterns capture high-intent traffic competitors miss.

Start by documenting actual questions your customers ask. Use their language, not yours. Structure content conversationally. Answer directly and comprehensively.

The future of search is conversational. The winners will be businesses that speak their customers’ language naturally.

For platform-specific optimization tactics and comprehensive implementation strategies, explore our complete voice search optimization guide.

Citations & Sources

- Backlinko – “Voice Search SEO Study” – https://backlinko.com/voice-search-seo-study

- PwC – “Consumer Intelligence Series: Voice Assistants” – https://www.pwc.com/us/en/services/consulting/library/consumer-intelligence-series/voice-assistants.html

- Moz – “Voice Search SEO Guide” – https://moz.com/learn/seo/voice-search

- Think with Google – “Near Me Search Growth” – https://www.thinkwithgoogle.com/consumer-insights/consumer-trends/near-me-searches/

- BrightLocal – “Voice Search for Local Business Study” – https://www.brightlocal.com/research/voice-search-for-local-business-study/

- Search Engine Journal – “Voice Search Statistics & Insights” – https://www.searchenginejournal.com/voice-search-stats/

- Pew Research Center – “Mobile Technology Fact Sheet” – https://www.pewresearch.org/internet/fact-sheet/mobile/

- Nielsen Norman Group – “Voice User Interfaces” – https://www.nngroup.com/articles/voice-first/

- QSR Magazine – “Domino’s Voice Ordering Launch” – https://www.qsrmagazine.com/technology/dominos-launches-new-voice-ordering-through-google-assistant

- AlsoAsked – Question Research Tool – https://alsoasked.com/

🗣️ Natural Language Patterns in Voice Search

Data-Driven Analysis of Conversational Search Behavior 2024-2025

[Chart: Voice vs Text Search Query Length Comparison. Data source: Backlinko Voice Search SEO Study 2024]

[Chart: Question Word Distribution in Voice Queries. Data source: Moz Voice Search Analysis & Search Engine Journal 2024]

💡 Common Natural Language Patterns

Spoken: "What's the best pizza place in New York City?"

Spoken: "Where can I find a coffee shop near me right now?"

Spoken: "When does the pharmacy close today?"

Spoken: "How do I fix a leaky faucet myself?"

Spoken: "Why is my internet connection so slow today?"

Spoken: "Who is the current CEO of Tesla?"

🎯 Explore Voice Search Intent Types

📚 Informational Intent Patterns

Characteristics

Users seeking knowledge, definitions, or how-to information. These queries are longest and most conversational.

| Pattern Example | Avg Length |
|---|---|
| "How does photosynthesis work?" | 25-35 words |
| "What causes global warming?" | 20-30 words |
| "Why do leaves change color in fall?" | 30-40 words |

🧭 Navigational Intent Patterns

Characteristics

Users looking for specific websites, locations, or destinations. Often use command language.

| Pattern Example | Avg Length |
|---|---|
| "Take me to Starbucks" | 15-20 words |
| "Navigate to the nearest Apple Store" | 18-25 words |
| "Show me directions to work" | 12-18 words |

🛒 Transactional Intent Patterns

Characteristics

Users ready to purchase or complete actions. Include action verbs like "order," "buy," "book," "reserve."

| Pattern Example | Conversion Rate |
|---|---|
| "Order large pepperoni pizza from Domino's" | 3x higher |
| "Book haircut appointment for tomorrow" | 3x higher |
| "Buy wireless headphones under $100" | 3x higher |

📍 Local Intent Patterns

Characteristics

Location-based queries dominate voice search. 58% of consumers use voice for local business info.

| Pattern Example | Growth Rate |
|---|---|
| "Coffee shop near me open now" | 900% |
| "Best Italian restaurant nearby" | 900% |
| "Plumber available tonight in my area" | 900% |

[Chart: Voice Search Accuracy by Platform (2024). Data source: Loup Ventures Voice Assistant IQ Test 2024]

[Chart: Natural Language Features in Voice Queries. Data compiled from Backlinko, Moz, and Search Engine Journal studies 2024]


Data sources: Backlinko Voice Search Study, Moz Voice Search Research, Google Think with Google, Search Engine Journal, PwC Consumer Intelligence, BrightLocal Local Search Study, Loup Ventures IQ Test, Pew Research Center (2023-2024 reports)