Millions of websites face unprecedented visibility challenges as AI crawlers fail to read JavaScript-dependent content

August 16, 2025 — A critical technical crisis is quietly unfolding across the web as artificial intelligence-powered search engines and chatbots struggle to read content from JavaScript-heavy websites. While Google’s traditional crawler has evolved to handle client-side rendering, major AI platforms including ChatGPT, Claude, and Perplexity cannot execute JavaScript, effectively rendering millions of modern websites invisible to the next generation of search technology.

The Scale of the JavaScript Problem

The issue affects a vast portion of the modern web. Most AI crawlers, including ChatGPT, can’t read JavaScript—unlike Google, which renders it through sophisticated infrastructure. OpenAI’s GPTBot and ChatGPT’s browsing tool do not execute JavaScript. They don’t run scripts. They don’t wait for data-fetching. They don’t interact with buttons or menus. What they see is the raw initial HTML of the page—and nothing more.

This limitation creates a fundamental disconnect between what users see on websites and what AI systems can access. If your page requires a browser to render correctly, ChatGPT won’t see it. It’s not a browser. This extends to other major AI platforms, creating a systematic blindness to JavaScript-dependent content.
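
To make the gap concrete, here is a minimal sketch of what a non-rendering client can read from a page. It only parses the raw HTML and never runs scripts. The `VisibleText` class, the `visible_text` helper, and both sample pages are hypothetical illustrations, not the actual logic of any AI crawler.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects the text a client sees when it reads HTML without running scripts."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        # Ignore whitespace and anything inside <script> or <style>.
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def visible_text(html: str) -> str:
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# A client-side rendered shell: the content only exists after bundle.js runs.
CSR_SHELL = """<html><head><title>Shop</title></head>
<body><div id="root"></div><script src="/bundle.js"></script></body></html>"""

# The same page with the content already present in the initial HTML.
SSR_PAGE = """<html><head><title>Shop</title></head>
<body><div id="root"><h1>Blue Widget</h1><p>In stock, $19</p></div>
<script src="/bundle.js"></script></body></html>"""

print(repr(visible_text(CSR_SHELL)))  # 'Shop'
print(repr(visible_text(SSR_PAGE)))   # 'Shop Blue Widget In stock, $19'
```

For the client-side rendered shell, the only readable text is the page title. Everything a user would see in the browser is missing from the response.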

Real-World Case Study: Complete AI Invisibility

A comprehensive case study conducted by Glenn Gabe of GSQi revealed the stark reality of this problem. Gabe analyzed a site that is entirely client-side rendered and relies on JavaScript to display its content. The results were alarming:

ChatGPT Testing Results:

- Could not read the content of JavaScript-rendered pages

- Failed to find content for every URL tested

- Often displayed incorrect favicons (showing default generic favicons instead of the site’s actual favicon)

- Responses typically ended with explanations that the content could not be retrieved

Perplexity Analysis:

- Similar to ChatGPT, explained it could not read the content of the page

- Sometimes received “Access Denied” errors despite no blocking in robots.txt

- Failed to find content for every URL tested

- Also showed incorrect favicon problems

Claude Results:

- Could not find content that depends on JavaScript rendering

- Explained it wasn’t able to retrieve content from pages

- Could not display any text from JavaScript-dependent pages

The Technical Divide: Google vs. AI Crawlers

The contrast between Google’s capabilities and AI crawlers reveals a significant technological gap:

| Google’s Approach | AI Crawlers’ Limitations |
|---|---|
| Advanced JavaScript rendering | No JavaScript execution |
| Web Rendering Service (WRS) | Basic HTML-only reading |
| Headless Chromium browser | Simple HTTP requests |
| Deferred rendering capability | Immediate content requirements |
| Resource-intensive processing | Lightweight scanning approach |

Google is the exception. As one expert noted, Google has been around for a long time and crawling is at the core of what it does; it is difficult to scrape the web and its dynamic content without the ability to render JavaScript.

Industry Impact and Warning Signs

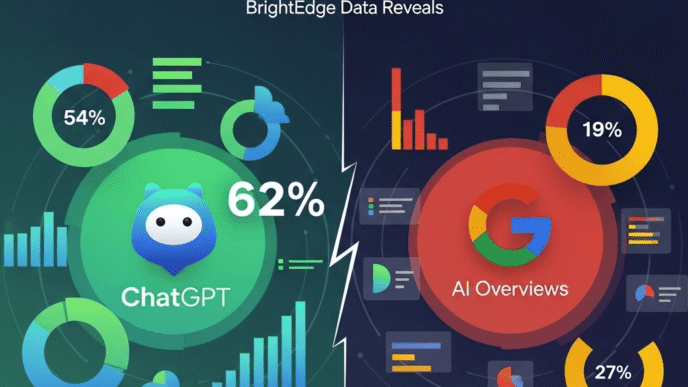

The implications extend far beyond individual websites. Major AI crawlers, such as GPTBot and Claude’s crawler, have distinct preferences for content consumption. GPTBot prioritizes HTML content (57.7% of its fetches), while Claude’s crawler focuses more on images (35.17%). Because these crawlers read files as delivered and do not execute scripts, JavaScript-dependent sites are systematically disadvantaged.

Early Warning Indicators

Businesses can identify if they’re affected by checking for these warning signs:

- Favicon Problems: AI platforms showing generic favicons instead of actual site favicons

- Citation Failures: Sites not appearing as official sources in AI responses, even for content explicitly about the site

- Content Invisibility: AI tools claiming they “cannot access” or “cannot find” content from specific URLs

Essential Solutions for AI Visibility

Organizations must implement specific technical strategies to ensure AI crawler compatibility:

1. Implement Server-Side Rendering (SSR)

Render your content on the server so it appears in the initial HTML response. Frameworks like Next.js, SvelteKit, and Nuxt make this achievable. Since AI crawlers can’t execute client-side JavaScript, server-side rendering is essential for ensuring visibility.
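
The idea is framework-independent, so here is a minimal sketch using only Python’s standard library rather than Next.js, SvelteKit, or Nuxt. The `ARTICLES` store, the `render_article` helper, the `/app.js` script path, and the port are all hypothetical; a real site would use its framework’s own rendering pipeline.

```python
from html import escape
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical content store; a real site would query a database or CMS.
ARTICLES = {
    "/guides/ssr": {
        "title": "What is SSR?",
        "body": "The server sends finished HTML.",
    },
}


def render_article(article: dict) -> str:
    """Build the complete HTML document, content included, on the server."""
    title = escape(article["title"])
    body = escape(article["body"])
    return (
        "<!doctype html><html><head>"
        f"<title>{title}</title></head><body>"
        f"<main><h1>{title}</h1><p>{body}</p></main>"
        # The script is optional: it adds interactivity, not content.
        '<script src="/app.js" defer></script>'
        "</body></html>"
    )


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        article = ARTICLES.get(self.path)
        if article is None:
            self.send_error(404)
            return
        payload = render_article(article).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Start a local demo server (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Calling `serve()` and requesting `/guides/ssr` with any plain HTTP client returns the heading and paragraph in the first response, with no script execution required.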

2. Use Static Site Generation (SSG)

If your content is stable, use static site generation. Tools like Astro, Hugo, and Gatsby are built for this approach and ensure content is immediately available in HTML.
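
As a rough illustration of what these tools do at build time, the sketch below writes one finished HTML file per page. It is a toy stand-in, not how Astro, Hugo, or Gatsby are implemented; the `PAGES` data and the `render` and `build_site` helpers are hypothetical.

```python
from html import escape
from pathlib import Path

# Hypothetical content; a real build would read Markdown files or a CMS export.
PAGES = {
    "index": {"title": "Home", "body": "Welcome to the site."},
    "pricing": {"title": "Pricing", "body": "Plans start at $10 per month."},
}


def render(page: dict) -> str:
    """Return a complete HTML document with the content already in place."""
    title = escape(page["title"])
    body = escape(page["body"])
    return (
        f"<!doctype html><html><head><title>{title}</title></head>"
        f"<body><main><h1>{title}</h1><p>{body}</p></main>"
        "</body></html>"
    )


def build_site(pages: dict, out_dir: Path) -> list:
    """Write one index.html per page so each URL maps to a real file."""
    written = []
    for slug, page in sorted(pages.items()):
        page_dir = out_dir if slug == "index" else out_dir / slug
        page_dir.mkdir(parents=True, exist_ok=True)
        target = page_dir / "index.html"
        target.write_text(render(page), encoding="utf-8")
        written.append(target)
    return written
```

Running `build_site(PAGES, Path("dist"))` would produce `dist/index.html` and `dist/pricing/index.html`, which any static file host can serve as-is.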

3. Ensure Real URL Structure

If your page routing is handled purely client-side, AI bots can’t follow it. Ensure that every major page exists as a real URL returning full HTML rather than relying on JavaScript routing.
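
One common form of purely client-side routing keeps the page identity in the URL fragment (for example `/#/pricing`). The fragment is never sent to the server, so every such route returns the same HTML. The sketch below flags those URLs; the `is_client_only_route` helper and the example URLs are hypothetical, and the check is a heuristic that will not catch every client-side router.

```python
from urllib.parse import urlsplit


def is_client_only_route(url: str) -> bool:
    """True when the route lives in the fragment, e.g. /#/pricing or /#!/about."""
    fragment = urlsplit(url).fragment
    return fragment.startswith("/") or fragment.startswith("!")


urls = [
    "https://example.com/#/pricing",     # hash route: server only sees "/"
    "https://example.com/#!/about",      # hashbang route
    "https://example.com/pricing",       # real path
    "https://example.com/docs#install",  # ordinary in-page anchor
]
flagged = [u for u in urls if is_client_only_route(u)]
print(flagged)
```

Here only the first two URLs are flagged. Routers that use the History API produce normal-looking paths, so for those the remaining question is whether the server returns full HTML for each path.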

4. Test Without JavaScript

Disable JavaScript in your browser and load your pages. If the main content isn’t there, AI crawlers won’t see it either. This simple test reveals exactly what AI systems can access.
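
The same check can be scripted. The sketch below fetches the raw HTML without executing anything and reports which expected phrases are absent. The `fetch_raw_html` and `missing_phrases` helpers and the `no-js-audit/0.1` user-agent string are hypothetical, and the sketch assumes that text found only inside `<script>` or `<style>` blocks should not count as readable content.

```python
import re
from urllib.request import Request, urlopen

# Matches whole <script>...</script> and <style>...</style> blocks.
SCRIPT_OR_STYLE = re.compile(
    r"<(script|style)\b.*?</\1\s*>", re.IGNORECASE | re.DOTALL
)


def fetch_raw_html(url: str, timeout: float = 10.0) -> str:
    """Download the initial HTML response; nothing is rendered or executed."""
    request = Request(url, headers={"User-Agent": "no-js-audit/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def missing_phrases(raw_html: str, phrases: list) -> list:
    """Return the phrases that do not appear outside script/style blocks."""
    haystack = SCRIPT_OR_STYLE.sub(" ", raw_html).lower()
    return [p for p in phrases if p.lower() not in haystack]
```

A typical use would be `missing_phrases(fetch_raw_html(url), ["Blue Widget", "Add to cart"])` for a handful of key pages. An empty list suggests the main content is present in the initial HTML.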

5. Implement Dynamic Rendering

Use dynamic rendering solutions that detect AI crawlers and serve them static HTML versions while maintaining the JavaScript experience for users. Tools like Prerender.io specifically address this challenge.
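
The detection step can be sketched as a simple user-agent check. This is not how Prerender.io is implemented; the `is_ai_crawler` and `choose_variant` helpers are hypothetical, and the token list is an assumption based on crawler names the vendors have published, so verify it against their current documentation.

```python
from typing import Optional

# Assumed user-agent tokens; check each vendor's documentation before use.
AI_CRAWLER_TOKENS = (
    "GPTBot",
    "ChatGPT-User",
    "OAI-SearchBot",
    "ClaudeBot",
    "PerplexityBot",
)


def is_ai_crawler(user_agent: Optional[str]) -> bool:
    """Case-insensitive substring match against the known crawler tokens."""
    if not user_agent:
        return False
    lowered = user_agent.lower()
    return any(token.lower() in lowered for token in AI_CRAWLER_TOKENS)


def choose_variant(user_agent: Optional[str]) -> str:
    """Return 'prerendered' for known AI crawlers, otherwise 'spa'."""
    return "prerendered" if is_ai_crawler(user_agent) else "spa"


# Illustrative user-agent strings, simplified for the example.
print(choose_variant("Mozilla/5.0 (compatible; GPTBot/1.1)"))       # prerendered
print(choose_variant("Mozilla/5.0 (Windows NT 10.0) Firefox/128"))  # spa
```

The prerendered variant should carry the same content users see in the browser. User-agent strings can be spoofed, so this check is a routing hint, not an access control.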

The Business Impact: Traffic and Revenue at Risk

The stakes are considerable. Ahrefs, for example, recently stated that it has received 14,000+ customers from ChatGPT alone. If your content isn’t being featured in LLMs, your site’s discoverability could suffer badly.

Sites particularly vulnerable include:

- Large Enterprise Sites: More complex infrastructure often relies heavily on JavaScript

- E-commerce Platforms: Product catalogs and dynamic pricing frequently use client-side rendering

- SaaS Applications: User interfaces built as Single Page Applications (SPAs)

- Content Sites with Dynamic Features: Sites using JavaScript for content loading and user interactions

Google’s Warning and Industry Response

Google itself has issued warnings about excessive JavaScript use, highlighting potential blind spots for AI search crawlers. Martin Splitt from Google elaborated on the balance between traditional websites and web applications, emphasizing the necessity of careful JavaScript usage.

“The excitement around JavaScript can lead to its overuse, which may not be practical for every element of a website.”

This warning takes on new significance in the context of AI search, where over-reliance on JavaScript limits site visibility.

Expert Perspectives: The Rendering Revolution

Industry experts are calling for a fundamental shift in development approaches:

“AI crawlers’ technology is not as advanced as search engine bots crawlers yet. If you want to show up in AI chatbots/LLMs, it’s important that your Javascript can be seen in the plain text (HTML source) of a page. Otherwise, your content may not be seen.”

— Peter Rota, SEO Consultant

The consensus is clear: the traditional “JavaScript-first” development strategy needs reevaluation in light of AI crawler limitations.

The Future: Hybrid Approaches and Technical Evolution

The solution isn’t abandoning JavaScript entirely but adopting smarter implementation strategies:

Hybrid Rendering Models:

- Initial page load uses server-side rendering for AI compatibility

- Subsequent interactions use client-side rendering for user experience

- Progressive enhancement ensures functionality across all user agents

Progressive Enhancement:

- Core content delivered in HTML

- JavaScript adds interactivity without being essential for content access

- Graceful degradation ensures basic functionality without JavaScript

Framework Evolution: Modern frameworks are adapting to address these challenges. Next.js, Nuxt, and similar platforms now offer built-in solutions for hybrid rendering, making it easier to serve both human users and AI crawlers effectively.

Looking Ahead: The AI-First Web

As AI search continues to grow, with AI crawlers now accounting for billions of requests and reaching as much as 20% of Googlebot’s crawl activity, the pressure to optimize for AI visibility will only intensify.

Key Predictions for 2026:

- AI Crawler Evolution: AI platforms may develop more sophisticated JavaScript rendering capabilities

- Development Standard Shifts: Server-side rendering will become the default for content-dependent sites

- Framework Innovation: New tools will emerge specifically designed for AI-compatible web development

- SEO Strategy Revolution: Traditional SEO will expand to include “AIO” (AI Optimization) practices

My Analysis: The JavaScript invisibility crisis represents more than a technical challenge—it’s a fundamental shift requiring new development paradigms. Organizations that proactively address this issue by implementing hybrid rendering strategies will maintain visibility across both traditional search and AI platforms. Those that don’t risk becoming invisible to the next generation of information discovery tools, potentially losing significant traffic and business opportunities.

Conclusion: Adapt or Become Invisible

The emergence of AI-powered search represents both a crisis and an opportunity for web developers and businesses. While JavaScript-heavy sites face unprecedented invisibility risks, the solutions are achievable for organizations willing to invest in technical modernization.

The key is recognizing that AI crawlers aren’t just another search engine—they represent a fundamentally different approach to content consumption that requires websites to be accessible at the HTML level. Sites that adapt to this reality by implementing server-side rendering, static generation, or hybrid approaches will thrive in the AI-first web. Those that don’t may find themselves increasingly invisible as AI-powered search continues to grow.

The choice is clear: evolve your rendering strategy now, or risk disappearing from the next generation of search results. In a world where AI increasingly mediates information discovery, being invisible to AI crawlers isn’t just a technical problem—it’s an existential business threat.

As AI search technology continues to evolve, the fundamental requirement remains constant: content must be accessible in HTML to be discoverable. Organizations that understand and act on this principle will secure their position in the AI-driven future of web search and discovery.