You optimized your content for AI Overviews three months ago. But are you actually getting cited? Or just guessing in the dark?

Without proper AI Overview testing methodology, you’re flying blind. You might be dominating AI citations—or completely invisible—and have no clue either way.

According to BrightEdge’s 2024 visibility research, only 23% of content creators actively track their AI Overview presence despite 84% of major queries now showing these AI-generated summaries. That’s a massive measurement gap costing real traffic and citations.

This guide shows you exactly how to test if your content appears in AI Overviews, build systematic tracking processes, and measure performance that actually matters. No more guessing—just data-driven insights you can act on.

Let’s establish your measurement framework.

Why Does AI Overview Testing Matter?

If you don’t test AI Overview presence systematically, you risk spending optimization effort on strategies that don’t work.

Traditional analytics don’t capture AI Overview performance. Google Search Console shows impressions and clicks, but doesn’t distinguish between traditional organic results and AI Overview citations.

The Measurement Blind Spot

You could be getting cited in AI Overviews without knowing it. Or your competitors could be dominating while you assume you’re competitive.

The visibility gap creates serious problems:

- Wasted optimization effort on wrong priorities

- Missed opportunities in high-performing topics

- No data to prove ROI of AI Overview strategies

- Inability to identify what’s actually working

- No competitive intelligence on citation patterns

According to SEMrush’s AI Overview study, brands tracking AI Overview performance adjust strategies 3.4x faster than those relying on intuition alone.

What You Actually Need to Measure

Monitoring AI Overviews requires tracking different metrics than traditional SEO.

Critical AI Overview metrics:

- Citation frequency (how often you’re cited)

- Citation position (where in AI Overview)

- Competitor citation share

- Query categories triggering citations

- Citation context and framing

- Traffic impact from citations

- Branded search lift post-citation

These metrics collectively reveal AI Overview performance and optimization opportunities.

How to Manually Test AI Overview Presence

Manual testing forms your measurement foundation.

Measuring AI snapshot visibility starts with systematic manual checking across your target keyword set.

Setting Up Your Testing Environment

Proper testing requires eliminating personalization that skews results.

Essential testing setup:

- Use incognito/private browsing mode

- Clear cookies and cache before each session

- Use a VPN to test different geographic locations

- Test on both desktop and mobile devices

- Document device, location, and date for each test

- Use a standardized browser (Chrome recommended)

Personalization dramatically affects AI Overview appearance. Your logged-in results differ substantially from what most users see.

The Systematic Manual Testing Process

Don’t just search randomly. Build a repeatable testing methodology.

Step-by-step manual testing:

- Compile your target keyword list (20-50 priority keywords)

- Open incognito browser window

- Search first keyword

- Document whether AI Overview appears

- If present, document whether your content is cited

- Note citation position (which link in the overview)

- Screenshot for records

- Repeat for all keywords

- Track in spreadsheet with dates

This process takes 30-60 minutes weekly but provides essential baseline data.

Pro Tip: Conduct manual testing at the same time each week using the same device and location. Consistency enables accurate trend tracking over time and eliminates variables that complicate analysis. – Testing methodology best practice

Recording and Organizing Results

Structure your manual testing data for analysis.

Essential tracking columns:

- Keyword/query

- Date tested

- Device (desktop/mobile)

- AI Overview present (yes/no)

- Your content cited (yes/no)

- Citation position (1st, 2nd, 3rd link, etc.)

- Competitor citations (which competitors appear)

- Screenshot file name

- Notes/observations

This structured data reveals patterns impossible to spot through casual observation.
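If you would rather keep the log in a CSV file than a hand-edited spreadsheet, the columns above map naturally onto a small record type. The following is a minimal sketch, not a prescribed format: the `TestResult` class, its field names, and the `write_results` helper are all hypothetical names chosen for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Optional


@dataclass
class TestResult:
    """One manual check of one keyword (field names are illustrative)."""
    keyword: str
    date_tested: str                   # ISO date, e.g. "2025-01-06"
    device: str                        # "desktop" or "mobile"
    overview_present: bool
    cited: bool
    citation_position: Optional[int]   # 1-based link position; None if not cited
    competitors_cited: str             # e.g. semicolon-separated domains
    screenshot_file: str
    notes: str = ""


def write_results(rows, out):
    """Write TestResult rows as CSV, with a header, to any file-like object."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(TestResult)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))


# Example: write a single made-up observation to an in-memory buffer.
buffer = io.StringIO()
write_results(
    [TestResult("best savings account", "2025-01-06", "desktop",
                True, True, 2, "rival.example", "2025-01-06_savings.png")],
    buffer,
)
print(buffer.getvalue())
```

Keeping one row per keyword, device, and date makes it straightforward to filter and pivot the log later.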

Building an Automated AI Overview Tracking System

Manual testing doesn’t scale. Automation enables comprehensive monitoring.

AI Overview tracking automation requires combining multiple tools and approaches.

SEO Platform AI Overview Features

Major SEO platforms now include AI Overview tracking capabilities.

SEMrush AI Overview Tracking shows which keywords trigger AI Overviews in your tracked keyword set. The platform monitors AI Overview appearance frequency and provides visibility trends over time.

Ahrefs AI Overview Data includes AI Overview presence indicators in rank tracking reports. You can filter keywords by those triggering AI Overviews and monitor changes.

BrightEdge AI Visibility offers the most comprehensive AI Overview tracking, showing citation frequency, competitor share, and content performance specifically in AI contexts.

According to Search Engine Journal’s tool comparison, enterprise tools provide 70-80% accuracy on AI Overview detection, though none achieve perfect coverage.

Setting Up Rank Tracking for AI Overviews

Configure your rank tracking tools specifically for AI Overview monitoring.

Optimization steps:

- Tag all AI Overview-relevant keywords separately

- Set up custom reports isolating AI Overview presence

- Configure alerts for AI Overview appearance changes

- Track both desktop and mobile separately

- Monitor competitor presence in AI Overviews

- Schedule weekly automated reports

Most platforms allow custom views filtering to AI Overview-triggering queries, making trend analysis efficient.

API-Based Monitoring Solutions

For advanced users, API access enables custom monitoring solutions.

DataForSEO and SEOMonitor offer API endpoints specifically for AI Overview detection. You can build custom dashboards pulling this data alongside other metrics.

This approach works best for agencies or larger organizations needing custom reporting across multiple clients or properties.

What Metrics Actually Matter for AI Overview Performance?

Not all metrics provide actionable insights. Focus on what matters.

AI Overview analytics should drive optimization decisions, not just satisfy curiosity.

Citation Frequency: Your Core Metric

Citation frequency measures how often you appear in AI Overviews across your target keyword set.

Calculating citation frequency:

Citation Rate = (Keywords where you’re cited / Total keywords triggering AI Overviews) × 100

A 30% citation rate means you’re cited in 30% of queries where AI Overviews appear in your tracked keyword set.

Track this monthly. Increasing citation frequency indicates improving AI Overview optimization effectiveness.
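The formula above is easy to compute from your testing log. Here is a minimal sketch, assuming each tracked keyword is reduced to a pair of booleans (AI Overview present, your content cited); the function name and input shape are illustrative choices, not part of any tool's API.

```python
def citation_rate(results):
    """Return the citation rate in percent, or None if no AI Overviews appeared.

    results: iterable of (overview_present, cited) boolean pairs, one per keyword.
    Keywords that did not trigger an AI Overview are excluded from the denominator.
    """
    triggering = [cited for present, cited in results if present]
    if not triggering:
        return None  # rate is undefined when nothing triggered an AI Overview
    return 100 * sum(triggering) / len(triggering)


# 10 keywords triggered an AI Overview, 3 of them cited you, 2 triggered nothing.
sample = [(True, True)] * 3 + [(True, False)] * 7 + [(False, False)] * 2
print(citation_rate(sample))  # 30.0
```

Note that keywords without an AI Overview are left out entirely, which matches the denominator in the formula.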

Citation Position Analysis

Where your citation appears within AI Overviews matters tremendously.

AI Overviews typically cite 3-8 sources. Position affects visibility and click-through probability. First citation position receives disproportionate attention and clicks.

Position tracking framework:

- Position 1: Primary citation (highest visibility)

- Position 2-3: Secondary citations (strong visibility)

- Position 4-6: Supporting citations (moderate visibility)

- Position 7+: Tertiary citations (lower visibility)

Monitor whether you’re moving toward primary positions over time.
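To see how your citations are distributed across these tiers, you can bucket each recorded position. This is a small sketch under the tier boundaries listed above; the `position_tier` and `tier_distribution` names are hypothetical.

```python
from collections import Counter


def position_tier(position: int) -> str:
    """Map a 1-based citation position to the tier names used in this guide."""
    if position < 1:
        raise ValueError("citation position is 1-based")
    if position == 1:
        return "primary"
    if position <= 3:
        return "secondary"
    if position <= 6:
        return "supporting"
    return "tertiary"


def tier_distribution(positions):
    """Count how many recorded citations fall into each tier."""
    return Counter(position_tier(p) for p in positions)


print(tier_distribution([1, 2, 3, 3, 5, 8]))
```

Comparing this distribution month over month shows whether citations are shifting toward the primary tier.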

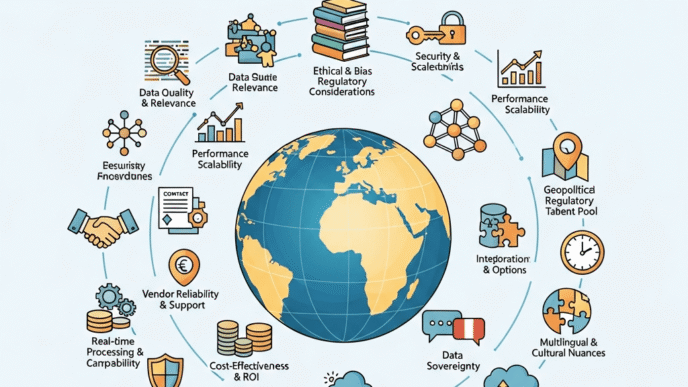

Competitive Citation Share

Your citation frequency means little without competitive context.

Competitive analysis approach:

- Identify 5-10 main competitors

- Track how often they’re cited vs. you

- Calculate relative citation share

- Identify topics where competitors dominate

- Analyze what makes their cited content different

According to Moz’s competitive research, the top performer in any niche typically captures 40-50% of available AI Overview citations.

Understanding your share reveals realistic improvement potential.
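One way to calculate relative citation share is to count, for each domain, the number of tracked keywords whose AI Overview cites it, then divide by the total across all domains. The sketch below assumes that definition (others are possible) and uses placeholder domains such as `you.example`.

```python
from collections import Counter


def citation_share(citations_by_keyword):
    """Return each domain's share of all observed citations, in percent.

    citations_by_keyword: dict mapping keyword -> list of cited domains.
    A domain is counted at most once per keyword.
    """
    counts = Counter(
        domain
        for domains in citations_by_keyword.values()
        for domain in set(domains)
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {domain: 100 * n / total for domain, n in counts.items()}


observed = {
    "keyword a": ["you.example", "rival.example"],
    "keyword b": ["rival.example"],
    "keyword c": ["rival.example", "other.example"],
}
print(citation_share(observed))
```

In this made-up data, `rival.example` holds 60% of citations and `you.example` 20%, which is the kind of gap the competitive analysis is meant to surface.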

Traffic Impact Measurement

Citations should drive measurable traffic increases.

Methodology for tracking AI Overview traffic:

Set up UTM parameters if possible, though AI Overviews don’t always pass referrer data. Analyze traffic spikes correlated with citation increases. Monitor direct traffic increases (some AI Overview traffic appears as direct).

Compare traffic to pages frequently cited vs. rarely cited. Track conversion rates from AI Overview-referred traffic.

This topic is covered in more depth in our complete AI Overviews optimization guide, along with additional attribution strategies.

Branded Search Volume Changes

AI Overview citations often boost brand awareness and branded searches.

Monitor branded search volume trends using Google Trends and Search Console. Significant citation increases often correlate with 15-30% branded search lifts within 30-60 days.

This indirect benefit compounds over time as brand recognition grows.

Comparison: AI Overview Tracking Methods

Different approaches suit different needs and resources.

| Method | Cost | Accuracy | Scale | Time Investment | Best For |
|---|---|---|---|---|---|
| Manual Testing | Free | High (when done correctly) | Low (20-50 keywords) | High (30-60 min/week) | Small sites, initial testing |
| SEO Platform Tools | $99-499/month | Moderate-High (70-80%) | High (1000+ keywords) | Low (5-10 min/week) | Most businesses |
| API Custom Solutions | $200-1000/month | High (85-90%) | Very High (10,000+ keywords) | High initially, low ongoing | Agencies, enterprises |
| Hybrid Approach | $99-499/month | Very High (90%+) | High (1000+ keywords) | Moderate (15-20 min/week) | Data-driven organizations |

The hybrid approach—automated tools plus strategic manual verification—delivers optimal accuracy-to-effort ratio.

Building Your AI Overview Testing Dashboard

Centralized dashboards enable quick performance assessment.

A tracking methodology is only useful if the results are easy to read, so AI snapshot visibility data needs accessible visualization.

Essential Dashboard Components

Your dashboard should answer key questions at a glance.

Must-have dashboard elements:

- Citation frequency trend (weekly/monthly)

- Citation position distribution

- Top-performing content (most cited)

- Competitor citation comparison

- Query categories driving citations

- Traffic impact metrics

- Recent citation wins and losses

Looker Studio (formerly Google Data Studio), Tableau, or even advanced spreadsheets work for dashboard creation.

Connecting Data Sources

Pull data from multiple sources for comprehensive views.

Data integration approach:

- SEO platform API for citation data

- Google Search Console for traffic patterns

- Google Analytics for engagement metrics

- Manual testing spreadsheet for verification

- Competitor tracking tools

- Branded search volume data

Consolidated data reveals relationships between metrics impossible to spot in isolated tools.

Setting Up Alerts and Notifications

Don’t wait for weekly reports to spot significant changes.

Critical alert conditions:

- Citation frequency drops >10% week-over-week

- New competitor citations in your top keywords

- Content drops out of AI Overviews entirely

- New keywords start triggering AI Overviews

- Major citation position changes

Immediate alerts enable rapid response to optimization opportunities or problems.
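Two of these alert conditions can be checked with a few lines of code once you have weekly data. The sketch below is illustrative only: it assumes the 10% threshold means a relative drop in citation rate (not percentage points), and the function names and input shapes are hypothetical.

```python
def frequency_drop_alert(previous_rate, current_rate, threshold_pct=10.0):
    """True if the citation rate fell by more than threshold_pct, relative to last week.

    Assumption: the threshold is a relative change, so 30% -> 26% is a 13.3% drop.
    """
    if previous_rate <= 0:
        return False  # nothing to drop from
    drop_pct = 100 * (previous_rate - current_rate) / previous_rate
    return drop_pct > threshold_pct


def dropped_keywords(cited_last_week, cited_this_week):
    """Keywords where you were cited last week but are not cited this week."""
    return sorted(set(cited_last_week) - set(cited_this_week))


print(frequency_drop_alert(30.0, 26.0))                    # True
print(dropped_keywords(["a", "b", "c"], ["a", "c", "d"]))  # ['b']
```

If you prefer an absolute threshold in percentage points, compare `previous_rate - current_rate` to the threshold directly instead.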

Real-World Testing Implementation

A financial services company built comprehensive AI Overview testing in Q2 2024.

Initial challenge: Zero visibility into AI Overview performance despite significant optimization investment. Couldn’t determine ROI or prioritize efforts effectively.

Testing methodology implemented:

- Manual testing baseline across 50 priority keywords

- SEMrush rank tracking configured for AI Overview monitoring

- Custom Looker Studio dashboard integrating multiple data sources

- Weekly manual verification of top 20 keywords

- Monthly competitive citation analysis

- Quarterly comprehensive performance reviews

Measurement infrastructure results:

- Discovered 34% citation rate (vs. assumed 10%)

- Identified 8 competitor-dominated topics requiring different approaches

- Found citation position averaging 2.8 (strong secondary placement)

- Tracked 23% traffic increase correlating with citations

- Measured 18% branded search lift over 3 months

Optimization decisions enabled by data:

- Reallocated resources from overperforming topics to opportunity gaps

- Adjusted content structure on competitor-dominated topics

- Doubled down on formats producing primary citations

- Identified and fixed citations lost due to content age

The testing framework paid for itself within 60 days through improved optimization efficiency.

Common AI Overview Testing Mistakes

Avoid these critical measurement errors that corrupt data and decisions.

Mistake #1: Testing While Logged Into Google

Personalized results dramatically differ from what most users see.

Your logged-in Google account shows AI Overviews biased toward your browsing history, location, and search patterns. This creates false positives—seeing citations that most users don’t.

Fix: Always test in incognito mode with cache cleared. Better yet, use dedicated testing browsers that never log into Google.

Mistake #2: Inconsistent Testing Conditions

Changing devices, locations, or times creates noisy, incomparable data.

Fix: Standardize testing protocols. Same device, same time window, same location each testing cycle. Document any deviations that can’t be avoided.

Mistake #3: Only Testing Target Keywords

Your content might appear for unexpected queries you’re not tracking.

Fix: Periodically test related queries, long-tail variations, and question formats. Use Google Search Console to identify queries driving impressions you’re not actively tracking.

Mistake #4: Ignoring Mobile vs Desktop Differences

AI Overview appearance and citations differ significantly between devices.

Mobile shows AI Overviews more frequently. Citation formats differ. Some content appears on mobile but not desktop or vice versa.

Fix: Track both environments separately. Mobile-first indexing means mobile performance often matters more.

Mistake #5: Not Tracking Competitor Citations

Your citation frequency means little without competitive context.

Fix: Track 5-10 main competitors systematically. Calculate relative market share of citations. Identify where competitors consistently beat you.

Mistake #6: Confusing Correlation with Causation

Traffic increases don’t always result from AI Overview citations.

Fix: Use multiple data points confirming attribution. Look for timing alignment, traffic source patterns, and branded search correlations supporting AI Overview impact claims.

Advanced Testing Techniques

Beyond basics, sophisticated testing reveals deeper insights.

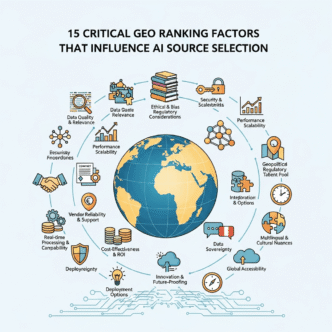

Geographic Variation Testing

AI Overviews vary by location, sometimes dramatically.

Testing approach:

- Use a VPN to test from different cities/countries

- Document geographic citation patterns

- Identify location-specific optimization opportunities

- Test key markets where your business operates

Local service businesses particularly benefit from geographic testing, as regional AI Overviews heavily influence local search.

Temporal Pattern Analysis

Citation patterns change by time of day, day of week, and seasonally.

Analysis methodology:

- Test same queries at different times

- Track day-of-week citation patterns

- Monitor seasonal fluctuations

- Identify optimal timing for content updates

Some topics show higher AI Overview appearance during business hours. Others peak evenings or weekends.
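If your testing log records the date of each check, day-of-week patterns can be pulled out with a simple grouping. This is a minimal sketch assuming observations are stored as (ISO date, AI Overview present, cited) tuples; that layout and the function name are assumptions made for the example.

```python
from collections import defaultdict
from datetime import date

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def citation_rate_by_weekday(observations):
    """Return {weekday name: citation rate in percent} for days with AI Overviews.

    observations: iterable of (iso_date, overview_present, cited) tuples.
    """
    totals = defaultdict(lambda: [0, 0])  # weekday -> [cited count, triggering count]
    for iso_date, present, cited in observations:
        if not present:
            continue  # only queries that showed an AI Overview count
        day = WEEKDAYS[date.fromisoformat(iso_date).weekday()]
        totals[day][0] += int(cited)
        totals[day][1] += 1
    return {day: 100 * c / n for day, (c, n) in totals.items()}


log = [
    ("2025-01-06", True, True),    # a Monday
    ("2025-01-06", True, False),
    ("2025-01-11", True, True),    # a Saturday
    ("2025-01-11", False, False),
]
print(citation_rate_by_weekday(log))
```

With only a handful of checks per day the rates will be noisy, so treat differences as hints to investigate until you have several weeks of data.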

Citation Context Analysis

How AI frames your citation affects user perception and click-through.

Context evaluation:

- What specific information does AI extract?

- How is your content described?

- What angle or perspective is emphasized?

- Does citation context match your intended message?

Understanding context enables optimizing for better framing and presentation in AI Overviews.

A/B Testing AI Overview Elements

Test content changes specifically for AI Overview impact.

Testable elements:

- Heading formats (question vs. topic-based)

- Paragraph structure and length

- List vs. paragraph presentation

- FAQ section presence and format

- Schema markup variations

Change one element, monitor citation impact over 30-60 days, measure results.
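To judge whether a change moved the needle, you can compare citation counts from the window before the change with the window after it. The sketch below uses a standard two-proportion z-score as a rough signal; this is one possible approach, not a method prescribed here, and it assumes each keyword check is an independent observation, which repeated weekly checks of the same keywords only approximate.

```python
import math


def citation_rate_change(before_cited, before_total, after_cited, after_total):
    """Compare citation rates before and after a content change.

    Totals are the number of checks that showed an AI Overview in each window.
    Returns the change in percentage points and a two-proportion z-score.
    """
    if before_total <= 0 or after_total <= 0:
        raise ValueError("each window needs at least one AI Overview observation")
    p_before = before_cited / before_total
    p_after = after_cited / after_total
    pooled = (before_cited + after_cited) / (before_total + after_total)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / before_total + 1 / after_total))
    z_score = (p_after - p_before) / std_err if std_err > 0 else 0.0
    return {"change_points": 100 * (p_after - p_before), "z_score": z_score}


# Made-up example: cited in 10 of 50 checks before, 20 of 50 after.
print(citation_rate_change(10, 50, 20, 50))
```

As a rule of thumb, an absolute z-score above roughly 2 suggests the difference is unlikely to be pure noise, but algorithm updates and seasonality can produce the same effect, so keep the one-change-at-a-time discipline.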

This systematic approach appears in our AI Overview optimization strategies guide.

Integrating AI Overview Testing with Overall SEO

Measuring performance in Google AI Overviews should complement, not replace, traditional SEO measurement.

Combined Measurement Framework

Build holistic visibility tracking spanning all search features.

Integrated metrics:

- Traditional organic rankings (positions 1-10)

- Featured snippet appearances

- AI Overview citations

- “People Also Ask” inclusions

- Image pack appearances

- Video carousel inclusions

Track how visibility shifts between features over time. Some queries migrate from featured snippets to AI Overviews.

Resource Allocation Based on Testing

Use measurement data to prioritize optimization efforts.

Decision framework:

- High AI Overview opportunity + low current visibility = high priority

- High AI Overview presence + strong performance = maintain

- Low AI Overview trigger rate + high traditional ranking = traditional SEO focus

- Competitor-dominated AI Overviews = differentiation strategy needed

Data-driven prioritization delivers better ROI than intuition-based resource allocation.
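The decision framework above can be expressed as a simple rule set per topic. The following sketch is only one way to encode it: every threshold (50% trigger rate, 25% citation rate, 40% competitor share, rank 10) is an assumption you should tune, and the order in which the rules are checked is also a design choice.

```python
def recommend(trigger_rate, citation_rate, top_competitor_share, organic_rank,
              high_trigger=50.0, strong_citation=25.0,
              dominant_share=40.0, good_rank=10):
    """Suggest a focus for one topic. Rates and shares are percentages.

    All default thresholds are illustrative assumptions, not benchmarks.
    """
    if trigger_rate < high_trigger:
        # Few queries show AI Overviews, so classic rankings matter most.
        if organic_rank <= good_rank:
            return "traditional SEO focus"
        return "review manually"
    if top_competitor_share >= dominant_share and citation_rate < strong_citation:
        return "differentiation strategy"
    if citation_rate >= strong_citation:
        return "maintain"
    return "high priority"


print(recommend(trigger_rate=80, citation_rate=5,
                top_competitor_share=10, organic_rank=4))  # high priority
```

Running this across all topic clusters gives a first-pass priority list that you can then review by hand.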

More on strategic planning appears in our complete optimization guide.

Future of AI Overview Testing

Measurement capabilities continue evolving as tools improve.

Emerging testing capabilities:

- Real-time citation monitoring (currently delayed 24-48 hours)

- AI-powered citation prediction models

- Automated content optimization recommendations

- Cross-platform AI answer tracking (ChatGPT, Perplexity, Claude)

- Enhanced attribution for AI Overview traffic

According to Gartner’s measurement predictions, by 2026, integrated AI answer tracking across all major platforms will become standard in enterprise SEO tools.

Early adopters building comprehensive testing frameworks now will have significant advantages as competition intensifies.

FAQ: AI Overview Testing

Q: How often should I test AI Overview presence manually?

Weekly testing of your top 20-50 priority keywords provides sufficient trend data without consuming excessive time. Monthly deep dives across your full keyword set (100-200+ keywords) catch broader patterns. Automated tools should run daily or weekly, while manual testing serves as verification and quality control.

Q: Can I rely entirely on automated tools for AI Overview tracking?

Not completely—automated tools currently achieve 70-80% accuracy at best. Manual verification of at least your top keywords remains important. A hybrid approach combining automated monitoring with strategic manual testing delivers optimal accuracy while staying time-efficient.

Q: What citation frequency is considered good performance?

Industry benchmarks suggest 25-35% citation frequency represents solid performance—you’re cited in 25-35% of queries triggering AI Overviews. Top performers in established niches achieve 40-50%. New sites or competitive topics might start at 10-15% and build over time. Focus on improving your rate quarter-over-quarter rather than absolute benchmarks.

Q: How do I measure traffic specifically from AI Overviews?

AI Overview traffic attribution is challenging because Google doesn’t consistently pass referrer data. Monitor traffic spikes correlating with citation increases, compare traffic patterns for highly-cited vs. non-cited pages, and track increases in direct traffic (some AI Overview clicks appear as direct). Use Google Search Console filtered by high-impression queries that trigger AI Overviews.

Q: Should I track AI Overviews separately from featured snippets?

Yes—they’re different features with different optimization requirements. Featured snippets extract from single sources, while AI Overviews synthesize multiple sources. Track both separately in your analytics, though note that some queries are shifting from featured snippets to AI Overviews over time.

Q: What tools provide the most accurate AI Overview tracking?

BrightEdge currently offers the most comprehensive enterprise-level AI Overview tracking. SEMrush and Ahrefs provide solid tracking for most users at more accessible price points. For smaller sites, manual testing combined with Google Search Console analysis works adequately. No tool achieves perfect accuracy, so verify critical data manually regardless of tool choice.

Final Thoughts

AI Overview testing transforms optimization from guessing to data-driven strategy.

Without systematic measurement, you’re optimizing blind—investing resources without knowing what works, missing opportunities, and potentially doubling down on ineffective tactics.

Build your testing framework methodically. Start with manual baseline testing establishing current performance. Add automated tracking through SEO platforms. Create dashboards consolidating data for quick assessment. Establish regular review cycles identifying trends and opportunities.

Most importantly, use data to drive decisions. Test hypotheses. Measure results. Adjust based on evidence rather than assumptions.

The brands dominating AI Overview citations in 2026 will be those that built sophisticated measurement systems in 2025. Competitors investing in optimization without measurement will waste resources on strategies that don’t actually improve citations.

Measurement enables improvement. Testing reveals truth. Data drives success.

Start building your AI Overview testing methodology today. Your future optimization ROI depends on the measurement foundation you establish now.

Stop guessing. Start measuring. Win with data.

Testing Methods Comparison

Tracking Methods Detailed Comparison

| Method | Cost | Accuracy | Scale | Best For |
|---|---|---|---|---|
| Manual Testing | Free | High | 20-50 keywords | Small sites, initial testing |
| SEO Platforms | $99-499/month | 70-80% | 1000+ keywords | Most businesses |
| API Solutions | $200-1000/month | 85-90% | 10,000+ keywords | Agencies, enterprises |
| Hybrid Approach | $99-499/month | 90%+ | 1000+ keywords | Data-driven organizations |

Critical Metrics to Track

Citation Frequency: Your Core Metric

Definition: Percentage of AI Overview-triggering queries where your content is cited.

Calculation Formula:

Citation Rate = (Keywords where you're cited / Total keywords triggering AI Overviews) × 100

Benchmark Performance:

- Excellent: 40-50% (top performers in established niches)

- Good: 25-35% (solid performance)

- Average: 15-25% (room for improvement)

- Developing: 10-15% (new sites or competitive topics)

Action: Track monthly and focus on quarter-over-quarter improvement rather than absolute benchmarks.

Citation Position Analysis

Why Position Matters: AI Overviews cite 3-8 sources. First position receives disproportionate attention and click-through.

Position Impact Hierarchy:

- Position 1: Primary citation - highest visibility and clicks

- Position 2-3: Secondary citations - strong visibility

- Position 4-6: Supporting citations - moderate visibility

- Position 7+: Tertiary citations - lower visibility

Strategy: Monitor whether you're trending toward primary positions over time. Average position of 2.8 indicates strong secondary placement.

Competitive Citation Share

Context is Critical: Your citation frequency means little without understanding competitor performance.

Analysis Framework:

- Identify 5-10 main competitors in your niche

- Track citation frequency for each competitor

- Calculate relative market share of citations

- Identify topics where specific competitors dominate

- Analyze what makes their cited content different

Benchmark: Top performers typically capture 40-50% of available citations in their niche. Understanding your share reveals realistic improvement potential.

Traffic Impact Measurement

Attribution Challenge: AI Overviews don't always pass clear referrer data, making traffic attribution complex.

Tracking Methodology:

- Monitor traffic spikes correlated with citation increases

- Compare traffic to frequently-cited vs. rarely-cited pages

- Track direct traffic increases (some AI Overview traffic appears as direct)

- Use Google Search Console filtered by high-impression AI Overview queries

- Monitor branded search volume lift (often 15-30% within 30-60 days)

Secondary Benefits: AI Overview citations boost brand awareness, leading to increased branded searches and long-term authority building.

AISEOJournal.net. Data Sources: BrightEdge, SEMrush, Search Engine Journal 2024

All statistics verified from official industry research